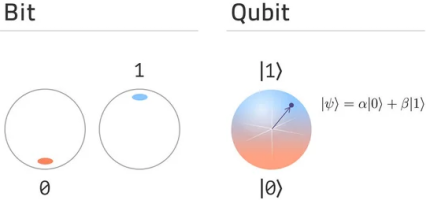

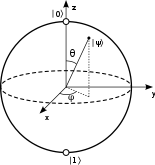

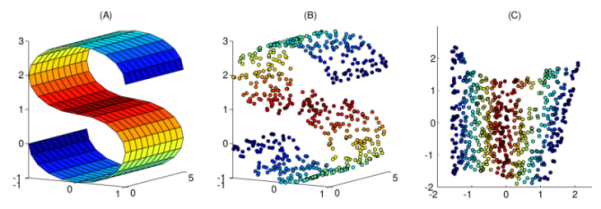

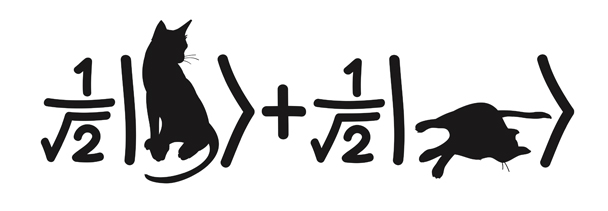

I continue with the quantum computing exercises from the Quantum Katas. In today’s post, I look at the concept of superposition. I’ll use the Julia language with the Yao quantum computing simulation package to complete the exercises.

The following katas cover basic single-qubit and multi-qubit gates, superposition, and flow control.
