Introduction

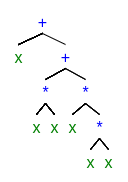

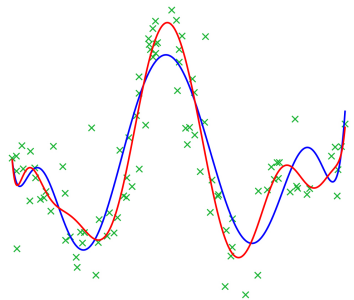

The goal of regression is to predict the value of one or more continuous target variables $t$ given the value of a $D$-dimensional vector $x$ of input variables. A polynomial is a specific example of a broad class of functions called linear regression models. The simplest linear regression models are also linear functions of the input variables. However, a much more useful class of functions can be constructed by taking linear combinations of a fixed set of nonlinear functions of the input variables, known as basis functions [1]. Such models remain linear in their parameters even though they are nonlinear in the inputs.
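To make the idea of basis functions concrete, here is a minimal sketch that fits a model which is linear in its weights using polynomial basis functions $\phi_j(x) = x^j$ and ordinary least squares. The function names (`polynomial_design_matrix`, `fit_linear_basis_model`, `predict`) and the toy data are assumptions made for illustration; they do not come from the original text.

```python
import numpy as np

def polynomial_design_matrix(x, degree):
    """Return Phi with Phi[n, j] = x[n] ** j for j = 0..degree."""
    x = np.asarray(x, dtype=float)
    return np.vander(x, N=degree + 1, increasing=True)

def fit_linear_basis_model(x, t, degree):
    """Least-squares weights w for y(x, w) = sum_j w[j] * x ** j."""
    Phi = polynomial_design_matrix(x, degree)
    w, *_ = np.linalg.lstsq(Phi, np.asarray(t, dtype=float), rcond=None)
    return w

def predict(x, w):
    """Evaluate the fitted model at the inputs x."""
    return polynomial_design_matrix(x, len(w) - 1) @ w

# Hypothetical noise-free toy data generated from t = 1 + 2x - 3x^2.
x_train = np.linspace(-1.0, 1.0, 20)
t_train = 1.0 + 2.0 * x_train - 3.0 * x_train ** 2

w = fit_linear_basis_model(x_train, t_train, degree=2)
print(w)
```

The model is nonlinear in $x$ but linear in `w`, which is why a single least-squares solve is enough. Swapping the polynomial basis for another fixed set of functions only changes how the design matrix is built.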

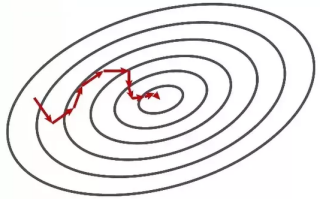

Regression models can also be used for time series modeling. Typically, a regression model provides a projection from the baseline status to some relevant demographic variables. Curve-type time series data are common examples of such variables. A typical time series model is the ARMA model, which combines two types of time series processes: autoregressive (AR) and moving average (MA) processes.
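As a rough illustration of how the two parts combine, the sketch below simulates an ARMA process of the form $x_t = \sum_i \varphi_i x_{t-i} + \varepsilon_t + \sum_j \theta_j \varepsilon_{t-j}$ with Gaussian noise. The function name `simulate_arma`, the coefficient values, and the zero initial conditions are assumptions chosen for the example, not part of the original text.

```python
import numpy as np

def simulate_arma(phi, theta, n_samples, sigma=1.0, seed=0):
    """Simulate an ARMA(p, q) series with p = len(phi) and q = len(theta).

    Terms that would reach before the start of the series are dropped,
    which is equivalent to assuming zero initial conditions.
    Returns the simulated series and the noise sequence that drove it.
    """
    rng = np.random.default_rng(seed)
    eps = rng.normal(0.0, sigma, size=n_samples)
    x = np.zeros(n_samples)
    for t in range(n_samples):
        # Autoregressive part: weighted sum of past values of the series.
        ar = sum(phi[i] * x[t - 1 - i]
                 for i in range(len(phi)) if t - 1 - i >= 0)
        # Moving average part: weighted sum of past noise terms.
        ma = sum(theta[j] * eps[t - 1 - j]
                 for j in range(len(theta)) if t - 1 - j >= 0)
        x[t] = ar + eps[t] + ma
    return x, eps

# Hypothetical ARMA(1, 1) coefficients.
series, noise = simulate_arma(phi=[0.5], theta=[0.4], n_samples=200)
```

Setting `theta=[]` gives a pure autoregressive process and `phi=[]` a pure moving average process, so the same function covers both building blocks.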
