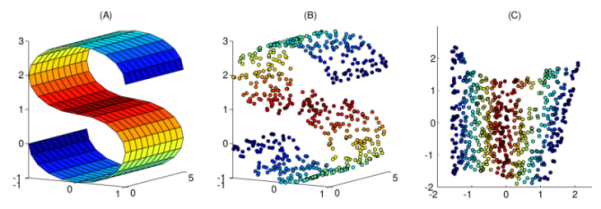

In statistical learning, many problems require initial preprocessing of multidimensional data, often to reduce its dimensionality: that is, to compress the features without losing information about the relevant properties of the data. Common linear dimensionality reduction methods, such as PCA or MDS, often fail to reduce data dimensionality properly, especially when the data lie near a nonlinear manifold embedded in a high-dimensional space.

There are many nonlinear dimensionality reduction (NLDR) methods for constructing low-dimensional manifold embeddings. The Julia package ManifoldLearning.jl implements the most common of these algorithms.
