Bayesian Inference

Bayesian approach

There is a difference in our treatment of uncertainty.

Frequentist approach: all probabilities refer to random samples of data and possible long-run frequencies, and so do such concepts as unbiasedness, consistency, confidence level, and significance level.

Bayesian approach: Uncertainty is also attributed to the unknown parameter $\theta$, but some values of $\theta$ are more likely than others. It reflects our ideas, beliefs, and past experiences about the parameter before we collect and use the data.

Prior distribution is whole distribution of values of $\theta$.

Example 1

What do you think is the average starting annual salary of a Computer Science graduate? We can certainly collect data and compute an estimate of average salary.

Before that, we already have our beliefs on what the mean salary may be. We can express it as some distribution with the most likely range between 40,000 and 70,000.

Collected data may force us to change our initial idea about the unknown parameter.

Probabilities of different values of of $\theta$ may change. Then we'll have a posterior distribution of of $\theta$.

Prior and Posterior

There are two sources of information to use in Bayesian inference:

collected and observed data;

prior distribution of the parameter.

Prior to the experiment, our knowledge about the parameter $\theta$ is expressed in terms of the prior distribution, $\pi(\theta)$.

The observed sample of data $X = (X_1, \ldots, X_n)$ has distribution

Observed data add information about the parameter, so the updated knowledge about $\theta$ can be expressed as the posterior distribution.

Marginal Distribution

The denominator, $m(x)$, represents the unconditional distribution of data $X$. This is the marginal distribution of the sample $X$.

Being unconditional means that it is constant for different values of the parameter $\theta$. It can be computed by the Law of Total Probability or its continuous-case version.

or

Example 2

A manufacturer claims that the shipment contains only 5% of defective items, but the inspector feels that in fact it is 10%. We have to decide whether to accept or to reject the shipment based on $\theta$, the proportion of defective parts.

Before we see the real data, let's assign a 50-50 chance to both suggested values of $\theta$, i.e., $\pi(0.05) = \pi(0.10) = 0.5$.

A random sample of 20 parts has 3 defective ones. Calculate the posterior distribution of $\theta$.

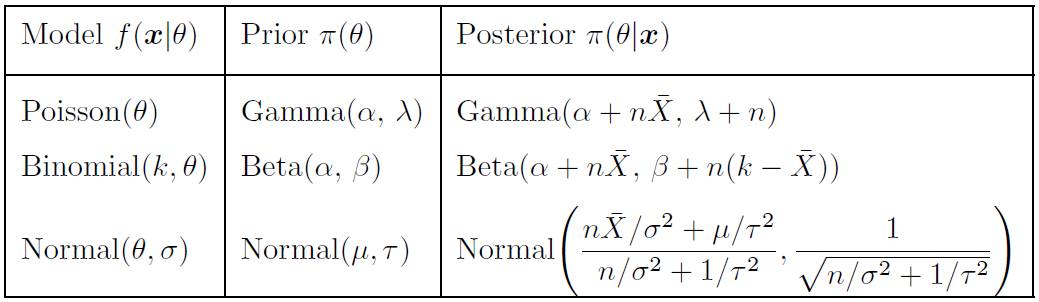

Conjugate Distribution Families

A family of prior distributions $\pi$ is conjugate to the model $f(x|\theta)$ if the posterior distribution belongs to the same family.

A suitably chosen prior distribution of $\theta$ may lead to a very tractable form of the posterior.

Gamma Conjugate Prior

Gamma family is conjugate to the Poisson model.

Let $(X_1, \ldots, X_n)$ be a sample from $Poisson(\theta)$ distribution with a $Gamma(\alpha, \lambda)$ prior distribution of $\theta$.

The Gamma prior distribution of $\theta$ has density

Then, the posterior distribution of $\theta$ given

Gamma Conjugate Prior (cont.)

Comparing with the general form of a $Gamma$ density, we see that $\pi(\theta|x)$ is the $Gamma$ distribution with new parameters,

and

Example 3

The number of network blackouts each week has $Poisson(\theta)$ distribution. The weekly rate of blackouts $\theta$ is not known exactly, but according to the past experience with similar networks, it averages 4 blackouts with a standard deviation of 2.

Classical Conjugate Families

Bayesian Estimation

To estimate $\theta$, we simply compute the posterior mean,

The result is a conditional expectation of $\theta$ given data $X$. In abstract terms, the Bayes estimator $\hat{\theta}_B$ is what we "expect" $\theta$ to be, after we observed a sample.

Accuracy

Estimator $\hat{\theta}_B = E\{\theta|x\}$ has the lowest squared-error posterior risk

For the Bayes estimator $\hat{\theta}_B$, posterior risk equals posterior variance.

which measures variability of $\theta$ around $\hat{\theta}_B$, according to the posterior distribution of $\theta$.

Example 4

Find the Bayes estimator for network blackouts in Example 3.

Example 5

Find the Bayes estimator for the quality enspection in Example 2.

Bayesian Credible Sets

Confidence intervals have a totally different meaning in Bayesian analysis. Having a posterior distribution of $\theta$, we no longer have to explain the confidence level $(1-\alpha)$ in terms of a long run of samples.

Set $C$ is a $(1-\alpha)100\%$ credible set for the parameter $\theta$ if the posterior probability for $\theta$ to belong to $C$ equals $(1 - \alpha)$. That is,

By minimizing the length of set $C$ we get highest posterior density credible set.

For the $Normal(\mu_x, \tau_x)$ posterior distribution of $\theta$, the $(1-\alpha)100\%$ HPD set is

Example 6

Find the HPD set for Example 1.