Multivariate Regression

Introduction

For simple regression, the predicted value depends on only one predictor variable:

$\hat{y} = b_0 + b_1 x$

For multiple regression, we write the regression model with more predictor variables:

$\hat{y} = b_0 + b_1 x_1 + b_2 x_2 + \cdots + b_k x_k$

Example 8

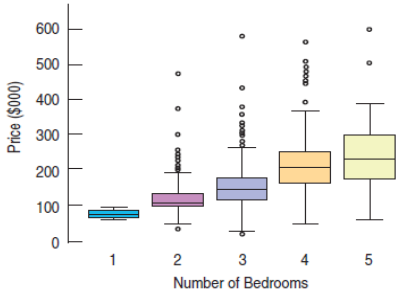

Home Price vs. Bedrooms, Saratoga Springs, NY. Random sample of 1057 homes. Can Bedrooms be used to predict Price?

Approximately linear relationship

Equal Spread Condition is violated.

Be cautious about using inferential methods on these data.

Example 8 (cont.)

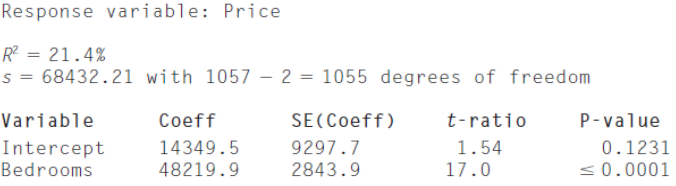

Price = 14,349.5 + 48,219.9 Bedrooms

The variation in Bedrooms accounts for only 21% of the variation in Price.

Perhaps the inclusion of another factor can account for a portion of the remaining variation.

Example 8 (cont.)

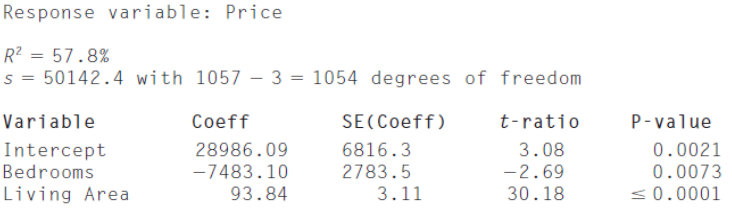

Price = 28,986.10 - 7,483.10 Bedrooms + 93.84 Living Area

Now the model accounts for 58% of the variation in Price.

Multivariate Linear Regression

A multivariate linear regression model assumes that the conditional expectation of a response $Y$ is a linear function of the predictors $x^{(1)}, \ldots, x^{(k)}$:

$E\{Y \mid X^{(1)}=x^{(1)}, \ldots, X^{(k)}=x^{(k)}\} = \beta_0 + \beta_1 x^{(1)} + \cdots + \beta_k x^{(k)}$

This model defines a $k$-dimensional regression plane in a $(k + 1)$-dimensional space of $(X^{(1)}, \ldots, X^{(k)}, Y)$.

The intercept $\beta_0$ is the expected response when all predictors equal zero.

Each regression slope $\beta_j$ is the expected change of the response $Y$ when the corresponding predictor $X^{(j)}$ changes by 1 while all the other predictors remain constant.

Interpreting Multiple Regression Coefficients

NOTE: The meaning of the coefficients in multiple regression can be subtly different than in simple regression.

Price = 28,986.10 - 7,483.10 Bedrooms + 93.84 Living Area

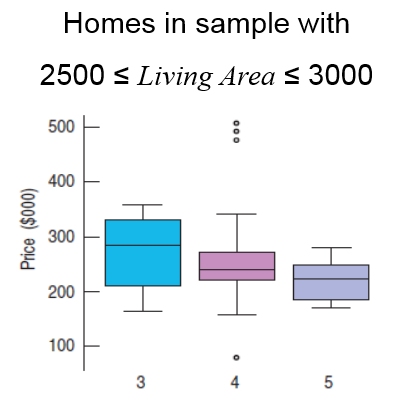

Price drops with increasing bedrooms? How can this be correct?

Interpreting Multiple Regression Coefficients (cont.)

In a multiple regression, each coefficient takes into account all the other predictor(s) in the model.

For houses with similar sized Living Areas, more bedrooms means smaller bedrooms and/or smaller common living space. Cramped rooms may decrease the value of a house.

Interpreting Multiple Regression Coefficients (cont.)

So, what's the correct answer to the question:

Do more bedrooms tend to increase or decrease the price of a home?

Correct answer:

"increase" if Bedrooms is the only predictor ("more bedrooms" may mean "bigger house", after all!)

"decrease" if Bedrooms increases for fixed Living Area ("more bedrooms" may mean "smaller, more-cramped rooms")

Summarizing: Multiple regression coefficients must be interpreted in terms of the other predictors in the model.
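The sign change can be reproduced on simulated data. The sketch below uses synthetic numbers, not the Saratoga sample: the coefficients, sample size, and noise levels are assumptions chosen so that larger houses tend to have more bedrooms while, for a fixed living area, an extra bedroom lowers the price.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 2000

# Synthetic data (NOT the Saratoga sample): bigger houses tend to have more bedrooms.
living_area = rng.normal(2000, 500, n)                  # square feet
bedrooms = living_area / 600 + rng.normal(0, 0.5, n)    # kept continuous for simplicity
price = 30_000 + 95 * living_area - 7_500 * bedrooms + rng.normal(0, 20_000, n)


def fit(y, *predictors):
    """Least-squares coefficients [intercept, slope_1, ...]."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


simple = fit(price, bedrooms)                  # Bedrooms is the only predictor
multiple = fit(price, bedrooms, living_area)   # Bedrooms with Living Area held fixed

print("Bedrooms slope, simple regression:  ", round(simple[1]))
print("Bedrooms slope, multiple regression:", round(multiple[1]))
```

In this simulation the Bedrooms slope is positive when Bedrooms is the only predictor and negative once Living Area is in the model, which mirrors the two "correct answers" above.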

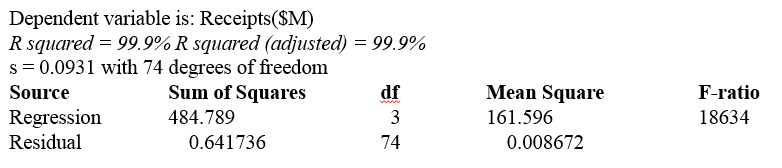

Example 9

On a typical night in New York City, about 25,000 people attend a Broadway show, paying an average price of more than 75 dollars per ticket. Data for most weeks of 2006-2008 include the variables Paid Attendance, # Shows, and Average Ticket Price (dollars), which are used to predict Receipts. Consider the regression model for these variables.

Interpret the coefficient of Paid Attendance.

Estimate receipts when paid attendance was 200,000 customers attending 30 shows at an average ticket price of 70 dollars.

Is this likely to be a good prediction?

Why or why not?

Example 9 (cont.)

Write the regression model for these variables.

Receipts = -18.32 + 0.076 Paid Attendance + 0.007 # Shows + 0.24 Average Ticket Price

Interpret the coefficient of Paid Attendance.

If the number of shows and ticket price are fixed, an increase of 1000 customers generates an average increase of $76,000 in receipts.

Estimate receipts when paid attendance was 200,000 customers attending 30 shows at an average ticket price of $70.

$13.89 million

Is this likely to be a good prediction?

Yes, $R^2$ (adjusted) is 99.9%, so this model explains most of the variability in Receipts.
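The $13.89 million estimate can be checked by plugging the values into the fitted equation. The units are an assumption inferred from the answers above: Paid Attendance appears to be measured in thousands of customers and Receipts in millions of dollars, since only then does a coefficient of 0.076 correspond to $76,000 per 1000 customers. The function name is illustrative.

```python
def predict_receipts(paid_attendance_thousands, shows, avg_ticket_price):
    """Fitted Receipts (assumed to be in millions of dollars) from the Example 9 equation."""
    return (-18.32
            + 0.076 * paid_attendance_thousands
            + 0.007 * shows
            + 0.24 * avg_ticket_price)


# 200,000 customers -> 200 (thousands), 30 shows, $70 average ticket price
estimate = predict_receipts(200, 30, 70)
print(f"Estimated receipts: ${estimate:.2f} million")
```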

Assumptions and Conditions

Linearity Assumption

Linearity Condition: Check each of the predictors. Also check the residual plot.

Independence Assumption

Randomization Condition: Does the data collection method introduce any bias?

Equal Variance Assumption

Equal Spread Condition: The variability of the errors should be about the same for all values of each predictor.

Normality Assumption

Nearly Normal Condition: Check to see if the distribution of residuals is unimodal and symmetric.

Assumptions and Conditions (cont.)

Summary of Multiple Regression Model and Condition Checks:

Check Linearity Condition with a scatterplot for each predictor. If necessary, consider data re-expression.

If the Linearity Condition is satisfied, fit a multiple regression model to the data.

Find the residuals and predicted values.

Inspect a scatterplot of the residuals against the predicted values. Check for nonlinearity and non-uniform variation.

Think about how the data were collected.

Do you expect the data to be independent?

Was suitable randomization utilized?

Are the data representative of a clearly identifiable population?

Is autocorrelation an issue?

Assumptions and Conditions (cont.)

Check the Nearly Normal Condition by inspecting a histogram of the residuals and a Normal probability plot. Watch for skewness and outliers. If the sample size is large, Normality is less important for inference.

If the conditions check, feel free to interpret the regression model and use it for prediction.
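The residual checks described above are usually done with plots, but a rough numerical version can be sketched as follows. The data are hypothetical and deliberately built so that the error spread grows with the first predictor. Splitting at the median fitted value and using sample skewness are crude stand-ins for the scatterplot and histogram, not a replacement for them.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 200

# Hypothetical data whose error spread grows with the first predictor.
x1 = rng.uniform(1, 10, n)
x2 = rng.uniform(0, 5, n)
y = 3 + 2 * x1 + 1.5 * x2 + rng.normal(0, 0.5 * x1)

X = np.column_stack([np.ones(n), x1, x2])
b, *_ = np.linalg.lstsq(X, y, rcond=None)
fitted = X @ b
residuals = y - fitted

# Equal Spread: compare residual spread for low vs. high fitted values.
low = residuals[fitted <= np.median(fitted)]
high = residuals[fitted > np.median(fitted)]
print("residual SD, low fitted values: ", round(low.std(ddof=1), 2))
print("residual SD, high fitted values:", round(high.std(ddof=1), 2))

# Nearly Normal: sample skewness of the residuals (0 for a symmetric distribution).
z = (residuals - residuals.mean()) / residuals.std()
print("residual skewness:", round(np.mean(z ** 3), 2))
```

A clearly larger spread among the high fitted values, as this simulation is designed to produce, is the numerical counterpart of a fan-shaped residual plot and signals a violated Equal Spread Condition.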

Least Squares Estimation

According to the method of least squares, we need to find slopes $\beta_1, \ldots, \beta_k$ and an intercept $\beta_0$ that minimize the sum of squared "errors"

$Q = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = \sum_{i=1}^{n} \left(y_i - \beta_0 - \beta_1 x_i^{(1)} - \cdots - \beta_k x_i^{(k)}\right)^2$

To minimize $Q$, we take partial derivatives of $Q$ with respect to all the unknown parameters and solve the resulting system of equations.
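Written out, the partial derivatives lead to a system of $k + 1$ linear equations in the $k + 1$ unknowns, often called the normal equations. The index notation $x_i^{(j)}$ (value of predictor $j$ for observation $i$) is an assumption consistent with the model stated earlier.

```latex
\begin{aligned}
Q &= \sum_{i=1}^{n} \bigl(y_i - \beta_0 - \beta_1 x_i^{(1)} - \cdots - \beta_k x_i^{(k)}\bigr)^2 \\
\frac{\partial Q}{\partial \beta_0} &= -2 \sum_{i=1}^{n} \bigl(y_i - \beta_0 - \beta_1 x_i^{(1)} - \cdots - \beta_k x_i^{(k)}\bigr) = 0 \\
\frac{\partial Q}{\partial \beta_j} &= -2 \sum_{i=1}^{n} x_i^{(j)} \bigl(y_i - \beta_0 - \beta_1 x_i^{(1)} - \cdots - \beta_k x_i^{(k)}\bigr) = 0,
\qquad j = 1, \ldots, k
\end{aligned}
```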

Matrix Approach to Multivariate Linear Regression

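Let $\mathbf{y} = (y_1, \ldots, y_n)^T$ be the response vector and $\mathbf{X}$ the $n \times (k+1)$ predictor matrix whose first column is all ones and whose remaining columns hold the $k$ predictors. Then the multivariate regression model can be viewed as

$E(\mathbf{y}) = \mathbf{X}\beta$

where $\beta = \begin{bmatrix} \beta_0 \\ \beta_1 \\ \vdots \\ \beta_k \end{bmatrix} \in \, \mathbb{R}^{k+1}$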

Matrix Approach (cont.)

Our goal is to estimate $\beta$ with a vector of sample regression coefficients $\mathbf{b} = \begin{bmatrix} b_0 \\ b_1 \\ \vdots \\ b_k \end{bmatrix}$

Fitted values will then be computed as

$\hat{\mathbf{y}} = \mathbf{X}\mathbf{b}$

Matrix Approach (cont.)

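Thus, the least squares problem reduces to minimizing

$Q(\mathbf{b}) = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = (\mathbf{y} - \mathbf{X}\mathbf{b})^T (\mathbf{y} - \mathbf{X}\mathbf{b})$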
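In matrix form, setting the gradient of the sum of squared errors to zero gives the normal equations $(\mathbf{X}^T\mathbf{X})\,\mathbf{b} = \mathbf{X}^T\mathbf{y}$, where $\mathbf{X}$ is the predictor matrix with a leading column of ones. The sketch below solves them with NumPy on hypothetical data. The true coefficients (1, 2, -3), the sample size, and the noise level are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n, k = 50, 2

# Hypothetical data: two predictors and a response with known coefficients.
predictors = rng.normal(size=(n, k))
y = 1.0 + 2.0 * predictors[:, 0] - 3.0 * predictors[:, 1] + rng.normal(0, 0.1, n)

# Predictor matrix augmented with a column of ones for the intercept.
X = np.column_stack([np.ones(n), predictors])

# Normal equations (X^T X) b = X^T y, solved without forming an explicit inverse.
b = np.linalg.solve(X.T @ X, X.T @ y)

y_hat = X @ b                     # fitted values
Q = (y - y_hat) @ (y - y_hat)     # minimized sum of squared errors

print("b =", np.round(b, 2))
print("Q =", round(Q, 4))
```

Solving the linear system directly is generally preferred to computing $(\mathbf{X}^T\mathbf{X})^{-1}$ explicitly. For ill-conditioned predictor matrices, `np.linalg.lstsq` is a more robust alternative.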

Analysis of Variance

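The total sum of squares is still

$SS_{TOT} = \sum_{i=1}^{n} (y_i - \bar{y})^2$

with $df_{TOT} = (n - 1)$ degrees of freedom, where $\bar{y} = \frac{1}{n}\sum_{i=1}^{n} y_i$ is the sample mean of the responses.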

Analysis of Variance (cont.)

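The regression sum of squares is

$SS_{REG} = \sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2$

with $df_{REG} = k$ degrees of freedom.

The error sum of squares is

$SS_{ERR} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2$

with $df_{ERR} = n - k - 1$ degrees of freedom.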

- $n$ = number of observations

- $k$ = number of predictor variables

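Residuals

$e_i = y_i - \hat{y}_i$

Coefficient of determination

$R^2 = \frac{SS_{REG}}{SS_{TOT}} = 1 - \frac{SS_{ERR}}{SS_{TOT}}$

F-statistic

$F = \frac{MS_{REG}}{MS_{ERR}} = \frac{SS_{REG}/k}{SS_{ERR}/(n-k-1)}$

Standard deviation of residuals

$s = \sqrt{\frac{SS_{ERR}}{n-k-1}}$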
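These quantities can all be computed from the three sums of squares. The sketch below uses the standard definitions ($R^2 = SS_{REG}/SS_{TOT}$, $F$ as the ratio of mean squares, and $s = \sqrt{SS_{ERR}/(n-k-1)}$) on hypothetical data. The coefficients and sample size are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
n, k = 40, 3

# Hypothetical data for illustration only.
X = np.column_stack([np.ones(n), rng.normal(size=(n, k))])
y = X @ np.array([5.0, 1.0, 0.0, -2.0]) + rng.normal(0, 1.0, n)

b = np.linalg.solve(X.T @ X, X.T @ y)
y_hat = X @ b
residuals = y - y_hat

ss_tot = np.sum((y - y.mean()) ** 2)        # df = n - 1
ss_reg = np.sum((y_hat - y.mean()) ** 2)    # df = k
ss_err = np.sum(residuals ** 2)             # df = n - k - 1

r_squared = ss_reg / ss_tot
f_stat = (ss_reg / k) / (ss_err / (n - k - 1))
s = np.sqrt(ss_err / (n - k - 1))           # standard deviation of residuals

print(f"SS_TOT = {ss_tot:.2f}, SS_REG + SS_ERR = {ss_reg + ss_err:.2f}")
print(f"R^2 = {r_squared:.3f}, F = {f_stat:.1f}, s = {s:.3f}")
```

Because the model includes an intercept, the decomposition $SS_{TOT} = SS_{REG} + SS_{ERR}$ holds up to rounding error, and the degrees of freedom add up the same way: $(n - 1) = k + (n - k - 1)$.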

Variance Estimator

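For the inference about individual regression slopes $\beta_j$, we compute the variances $Var(b_j)$ of all the estimated coefficients from the matrix

$VAR(\mathbf{b}) = \sigma^2 (\mathbf{X}^T \mathbf{X})^{-1}$

Diagonal elements of this $(k+1) \times (k+1)$ matrix are the variances of the intercept and of the individual regression slopes. In practice, $\sigma^2$ is estimated by $s^2 = SS_{ERR}/(n-k-1)$.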
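A minimal sketch of the variance estimator on hypothetical data follows. It assumes the usual estimate $s^2 (\mathbf{X}^T\mathbf{X})^{-1}$ of the coefficient covariance matrix, which has $k + 1$ rows and columns once the intercept is included. The square roots of its diagonal elements are the standard errors used in the t-tests.

```python
import numpy as np

rng = np.random.default_rng(3)
n, k = 60, 2

# Hypothetical data for illustration only.
X = np.column_stack([np.ones(n), rng.normal(size=(n, k))])
y = X @ np.array([1.0, 0.5, 0.0]) + rng.normal(0, 2.0, n)

b = np.linalg.solve(X.T @ X, X.T @ y)
residuals = y - X @ b
s2 = residuals @ residuals / (n - k - 1)    # estimate of sigma^2

cov_b = s2 * np.linalg.inv(X.T @ X)         # (k+1) x (k+1) estimated VAR(b)
std_errors = np.sqrt(np.diag(cov_b))        # SE(b_0), SE(b_1), ..., SE(b_k)
t_stats = b / std_errors                    # each tests H0: beta_j = 0

for j in range(k + 1):
    print(f"b_{j} = {b[j]: .3f}   SE = {std_errors[j]:.3f}   t = {t_stats[j]: .2f}")
```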

Testing the Multiple Regression Model

There are several hypothesis tests in multiple regression.

Each is concerned with whether the underlying parameters (slopes and intercept) are actually zero.

The hypothesis for slope coefficients:

$H_0: \beta_1 = \beta_2 = \cdots = \beta_k = 0$ versus $H_A$: at least one $\beta_j \neq 0$

Test the hypothesis with an F-test (a generalization of the t-test to more than one predictor).

Testing the Multiple Regression Model (cont.)

The F-distribution has two degrees of freedom:

- $k$, where $k$ is the number of predictors

- $n-k-1$, where $n$ is the number of observations

The F-test is one-sided, so bigger F-values mean smaller P-values.

If the null hypothesis is true, then F will be near 1.

Testing the Multiple Regression Model (cont.)

If a multiple regression F-test leads to a rejection of the null hypothesis, then check the t-test statistic for each coefficient:

$t_{n-k-1} = \frac{b_j - 0}{SE(b_j)}$

Note that the degrees of freedom for the t-test are $n - k - 1$.

Confidence interval:

$b_j \pm t^{*}_{n-k-1} \times SE(b_j)$

Testing the Multiple Regression Model (cont.)

In Multiple Regression, it looks like each $\beta_j$ tells us the effect of its associated predictor, $x_j$.

BUT

The coefficient $\beta_j$ can be different from zero even when there is no correlation between $y$ and $x_j$.

It is even possible that the multiple regression slope changes sign when a new variable enters the regression.

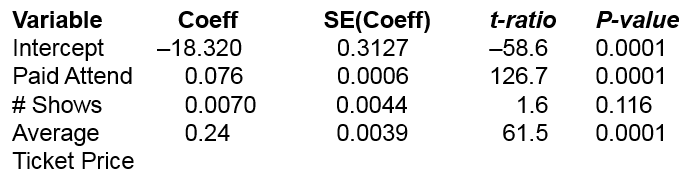

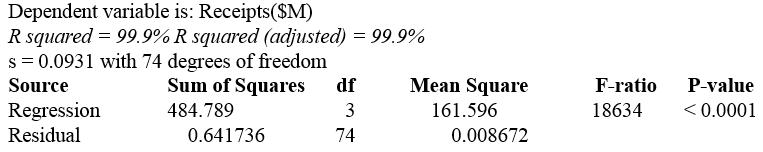

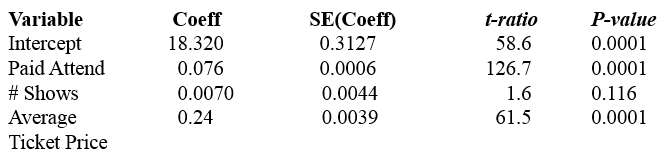

Example 10

On a typical night in New York City, about 25,000 people attend a Broadway show, paying an average price of more than 75 dollars per ticket. The variables Paid Attendance, # Shows, and Average Ticket Price (dollars) are used to predict Receipts.

State the hypotheses, the test statistic and p-value, and draw a conclusion for an F-test for the overall model.

Example 10 (cont.)

State the hypotheses for an F-test for the overall model.

$H_0: \beta_1 = \beta_2 = \beta_3 = 0$ versus $H_A$: at least one $\beta_j \neq 0$

State the test statistic and p-value.

The F-statistic is the F-ratio = 18634. The p-value is < 0.0001.

The p-value is small, so reject the null hypothesis. At least one of the predictors accounts for enough variation in y to be useful.

Example 10 (cont.)

Since the F-ratio suggests that at least one variable is a useful predictor, determine which of the following variables contribute in the presence of the others.

Paid Attendance (p = 0.0001) and Average Ticket Price (p = 0.0001) both contribute, even when all other variables are in the model.

The variable # Shows, however, is not significant (p = 0.116) and should be removed from the model.

ANOVA F-test

ANOVA F-test in multivariate regression tests significance of the entire model. The model is significant as long as at least one slope is not zero.

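We compute the F-statistic

$F = \frac{MS_{REG}}{MS_{ERR}} = \frac{SS_{REG}/k}{SS_{ERR}/(n-k-1)}$

and check it against the F-distribution with $k$ and $(n - k - 1)$ degrees of freedom.

Since $R^2 = SS_{REG}/SS_{TOT}$, the F-statistic can also be written as

$F = \frac{R^2}{1 - R^2} \cdot \frac{n-k-1}{k}$

So $F = 0$ exactly when $R^2 = 0$, and $F$ grows as $R^2$ grows. Testing the significance of the model with the F-test is therefore equivalent to testing whether $R^2 = 0$.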
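The link between $F$ and $R^2$ follows from the standard identity $F = \frac{R^2}{1-R^2} \cdot \frac{n-k-1}{k}$. The sketch below evaluates it for hypothetical values of $n$, $k$, and $R^2$, chosen only to show how $F$ moves with $R^2$. The function name is illustrative.

```python
def f_from_r2(r2, n, k):
    """Overall F-statistic implied by R^2 with k predictors and n observations."""
    return (r2 / (1 - r2)) * (n - k - 1) / k


# Hypothetical values, chosen only to show the relationship.
n, k = 100, 3
for r2 in (0.0, 0.1, 0.5, 0.9):
    print(f"R^2 = {r2:.1f}  ->  F = {f_from_r2(r2, n, k):.2f}")
```

The statistic is zero when $R^2 = 0$ and increases without bound as $R^2$ approaches 1, which is why a very high $R^2$ goes together with a very large F-ratio.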

Adjusted R-square

Adding new predictor variables to a model never decreases $R^2$ and may increase it.

But each added variable increases the model complexity, which may not be desirable.

Adjusted $R^2$ imposes a "penalty" on the correlation strength of larger models, depreciating their $R^2$ values to account for an undesired increase in complexity:

$R^2_{adj} = 1 - \frac{SS_{ERR}/(n-k-1)}{SS_{TOT}/(n-1)} = 1 - (1 - R^2)\,\frac{n-1}{n-k-1}$

Adjusted $R^2$ permits a more equitable comparison between models of different sizes.
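The size of the penalty can be seen by applying the standard formula $R^2_{adj} = 1 - (1 - R^2)\frac{n-1}{n-k-1}$ to the rounded $R^2$ values and sample size quoted in Example 8. The last call uses a hypothetical small sample for contrast. The function name is illustrative.

```python
def adjusted_r2(r2, n, k):
    """Adjusted R^2 = 1 - (1 - R^2) * (n - 1) / (n - k - 1)."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


# Rounded R^2 values and sample size quoted in Example 8 (n = 1057 homes).
print(adjusted_r2(0.21, 1057, 1))   # Bedrooms only
print(adjusted_r2(0.58, 1057, 2))   # Bedrooms + Living Area

# Hypothetical small sample: the same R^2 is penalized much more heavily.
print(adjusted_r2(0.58, 12, 2))
```

With more than a thousand observations the adjustment is negligible, so the two-predictor model keeps its advantage. The penalty only becomes substantial when $k$ is large relative to $n$.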